Escaping Prototype Purgatory: A Playbook for AI Teams

We’re living through a peculiar moment in AI development. On one hand, the demos are spectacular: agents that reason and plan with apparent ease, models that compose original songs from a text prompt, and research tools that produce detailed reports in minutes. On the other, many AI teams find themselves trapped in “prototype purgatory,” where impressive proofs of concept fail to translate into reliable, production-ready systems.

The data backs this up: the vast majority of enterprise GenAI initiatives fail to deliver measurable business impact. The core issue isn’t the power of the models but a “learning gap,” where generic tools fail to adapt to messy enterprise workflows. This echoes what I’ve observed in enterprise search, where the primary obstacle isn’t the AI algorithm but the foundational complexity of the environment it must navigate.

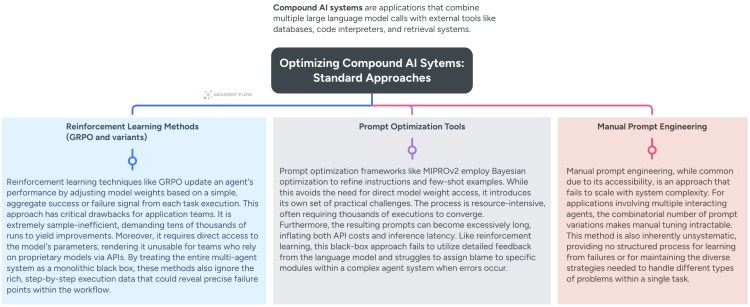

The problem is magnified when building agentic AI. These systems are often “black boxes”: notoriously hard to debug, with performance that degrades unpredictably when they are handed custom tools. They often lack memory, struggle to generalize, and fail not because the underlying model lacks intelligence but because the system around it is brittle. The challenge shifts from perfecting prompts to building resilient, verifiable systems.

What makes this particularly frustrating is the thriving “shadow AI economy” happening under our noses. In many companies, employees are quietly using personal ChatGPT accounts to get their work done. This disconnect reveals that while grassroots demand for AI is undeniably strong, the ambitious, top-down solutions being built are failing to meet it.

The Strategic Power of Starting Small

In light of these challenges, the most effective path forward may be a counterintuitive one. Instead of building complex, all-encompassing systems, AI teams should consider dramatically narrowing their focus — in short, think smaller. Much smaller.

This brings us to an old but newly relevant idea from the startup world: the “wedge.” A wedge is a highly focused initial product that solves one specific, painful problem for a single user or a small team, and does it exceptionally well. The goal is to deploy a standalone utility — build something so immediately useful that an individual will adopt it without waiting for widespread buy-in.

The key isn’t just to find a small problem, but to find the right person. Look for what some call “Hero users” — influential employees empowered to go off-script to solve their own problems. Think of the sales ops manager who spends half her day cleaning up lead data, or the customer success lead who manually categorizes every support ticket. They are your shadow AI economy, already using consumer tools because official solutions aren’t good enough. Build for them first.

This approach works particularly well for AI because it addresses a fundamental challenge: trust. A wedge product creates a tight feedback loop with a core group of users, allowing you to build credibility and refine your system in a controlled environment. It’s not just about solving the cold-start problem for networks — it’s about solving the cold-start problem for confidence in AI systems within organizations.

From Passive Record to Active Agent

AI teams also need to appreciate a fundamental shift in enterprise software. For decades, the goal was to become the “System of Record”: the authoritative database, such as Salesforce or SAP, that stores critical information. AI has moved the battleground. Today’s prize is becoming the “System of Action”: an intelligent layer that doesn’t just store data but actively performs work by automating entire workflows.

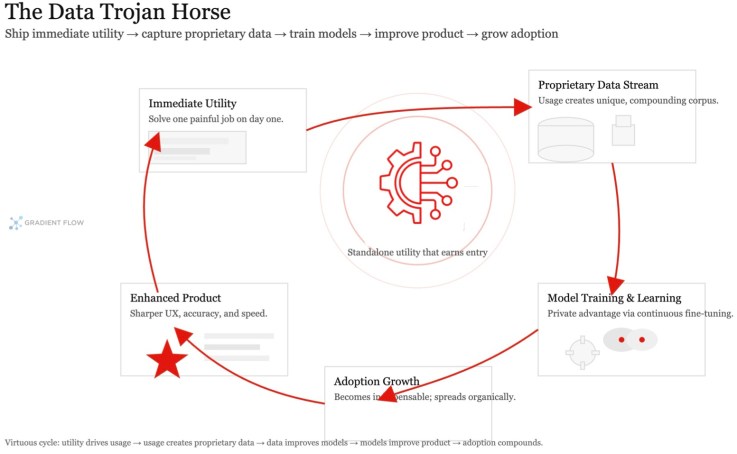

The most powerful way to build is through what some have called a “Data Trojan Horse” strategy. You create an application that provides immediate utility and, in the process, captures a unique stream of proprietary data. This creates a virtuous cycle: the tool drives adoption, usage generates unique data, this data trains your AI, and the enhanced product becomes indispensable. You’re building a moat not with a commoditized model, but with workflow-specific intelligence that compounds over time.

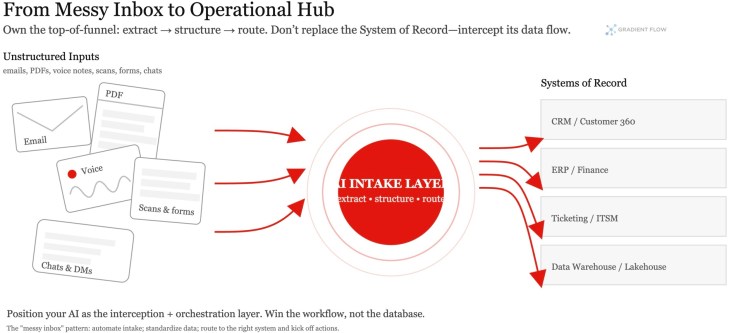
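To make the virtuous cycle concrete, here is a minimal Python sketch, assuming the tool records each suggestion it makes alongside what the user finally accepted. The `Interaction` and `FeedbackLog` names are hypothetical and not taken from any particular product. Every edit a user makes to the model's output becomes a labeled example, and the correction rate gives a rough signal of where the model still falls short.

```python
from dataclasses import dataclass, field


# Hypothetical feedback store; names are illustrative, not a real library API.
@dataclass
class Interaction:
    """One use of the tool: the input, the model's suggestion, and what the user kept."""
    input_text: str
    model_output: str
    user_output: str

    @property
    def was_corrected(self) -> bool:
        return self.model_output != self.user_output


@dataclass
class FeedbackLog:
    interactions: list[Interaction] = field(default_factory=list)

    def record(self, input_text: str, model_output: str, user_output: str) -> None:
        """Log every use as a side effect of normal work; the user does nothing extra."""
        self.interactions.append(Interaction(input_text, model_output, user_output))

    def training_examples(self) -> list[tuple[str, str]]:
        """(input, label) pairs, where the label is the output the user accepted."""
        return [(i.input_text, i.user_output) for i in self.interactions]

    def correction_rate(self) -> float:
        """Fraction of suggestions the user had to fix (0.0 if nothing is logged yet)."""
        if not self.interactions:
            return 0.0
        return sum(i.was_corrected for i in self.interactions) / len(self.interactions)


log = FeedbackLog()
log.record("Invoice from Acme, net 30", "category=invoice", "category=invoice")
log.record("Cannot log in after password reset", "category=billing", "category=account")
print(log.correction_rate())       # 0.5
print(log.training_examples()[1])  # ('Cannot log in after password reset', 'category=account')
```

In this sketch, the key choice is storing the user's final answer rather than only the model's guess: that workflow-specific record is exactly the data a thin wrapper around a generic API never accumulates.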

A concrete example is the “messy inbox problem.” Every organization has workflows that begin with a chaotic influx of unstructured information — emails, PDFs, voice messages. An AI tool that automates this painful first step by extracting, structuring, and routing this information provides immediate value. By owning this critical top-of-funnel process, you earn the right to orchestrate everything downstream. You’re not competing with the System of Record; you’re intercepting its data flow, positioning yourself as the new operational hub.
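The extract, structure, and route steps can be sketched in a few lines of Python. This is a minimal illustration under loud assumptions: simple keyword rules and a regular expression stand in for the AI model that would do the extraction in a real system, and the `ROUTES` table, queue names, and `triage` function are hypothetical. Anything the rules cannot classify is sent to a human review queue rather than being guessed.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class StructuredItem:
    sender: str
    category: str
    amount: Optional[float]
    queue: str


# Hypothetical routing table: category -> downstream queue.
ROUTES = {"invoice": "accounts_payable", "support": "support_desk"}

# Keyword rules stand in for an AI extraction model in this sketch.
KEYWORDS = {
    "invoice": ("invoice", "amount due", "payment"),
    "support": ("error", "broken", "help", "password"),
}

# Matches dollar amounts such as "$1,250.00" or "$ 40".
AMOUNT_RE = re.compile(r"\$\s?([\d,]+(?:\.\d{2})?)")


def classify(text: str) -> str:
    """Return the first category whose keywords appear in the text, else 'unknown'."""
    lowered = text.lower()
    for category, words in KEYWORDS.items():
        if any(word in lowered for word in words):
            return category
    return "unknown"


def extract_amount(text: str) -> Optional[float]:
    """Pull the first dollar amount out of free text, if there is one."""
    match = AMOUNT_RE.search(text)
    return float(match.group(1).replace(",", "")) if match else None


def triage(sender: str, body: str) -> StructuredItem:
    """Turn one unstructured message into a structured, routed record."""
    category = classify(body)
    # Unclassified items go to a person instead of being routed on a guess.
    queue = ROUTES.get(category, "human_review")
    return StructuredItem(sender, category, extract_amount(body), queue)


item = triage("billing@acme.example", "Invoice #881: amount due $1,250.00 by March 1")
print(item.queue, item.amount)  # accounts_payable 1250.0
other = triage("jo@example.com", "Quick question about next week's offsite")
print(other.queue)  # human_review
```

Replacing `classify` and `extract_amount` with model calls would leave the routing logic unchanged, which reflects the point of the paragraph above: the durable asset is ownership of the workflow, not the particular model doing the extraction.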

Look at a company like ServiceNow. It has positioned itself not as a replacement for core systems like CRMs or ERPs, but as an orchestration layer — a “System of Action” — that sits on top of them. Its core value proposition is to connect disparate systems and automate workflows across them without requiring a costly “rip and replace” of legacy software. This approach is a masterclass in becoming the intelligent fabric of an organization. It leverages the existing Systems of Record as data sources, but it captures the real operational gravity by controlling the workflows. Defensibility is gained not by owning the primary database, but by integrating data from multiple silos to deliver insights and automation that no single incumbent can replicate on its own. For AI teams, the lesson is clear: value is migrating from merely holding the data to intelligently acting upon it.

Building for the Long Game

The path from prototype purgatory to production runs through strategic focus. But as you build your focused AI solution, be aware that platform players are bundling “good enough” capabilities into their core offerings. Your AI tool needs to be more than a wrapper around an API; it must capture unique data and embed deeply into workflows to create real switching costs.

By adopting a wedge strategy, you gain the foothold needed to expand. In the AI era, the most potent wedges capture proprietary data while delivering immediate value, paving the way to becoming an indispensable System of Action. This aligns with the core principles of building durable AI solutions: prioritizing deep specialization and creating moats through workflow integration, not just model superiority.

Here’s a tactical playbook:

- Embrace the single-player start. Before architecting complex systems, create something immediately useful to one person.

- Target Hero users first. Find influential employees already using shadow AI. They have the pain and autonomy to be your champions.

- Find your “messy inbox.” Identify a painful, manual data-entry bottleneck. That’s your wedge opportunity.

- Design for the virtuous cycle. Ensure everyday usage generates unique data that improves your AI’s performance.

- Become the System of Action. Don’t just analyze data — actively complete work and own the workflow.

- Choose reliability over capability. A simple, bulletproof tool solving one problem well earns more trust than a powerful but fragile agent attempting everything.

The teams who succeed won’t be those chasing the most advanced models. They’ll be the ones who start with a single Hero user’s problem, capture unique data through a focused agent, and relentlessly expand from that beachhead. In an era where employees are already voting with their personal ChatGPT accounts, the opportunity isn’t to build the perfect enterprise AI platform — it’s to solve one real problem so well that everything else follows.

The post Think Smaller: The Counterintuitive Path to AI Adoption appeared first on Gradient Flow.